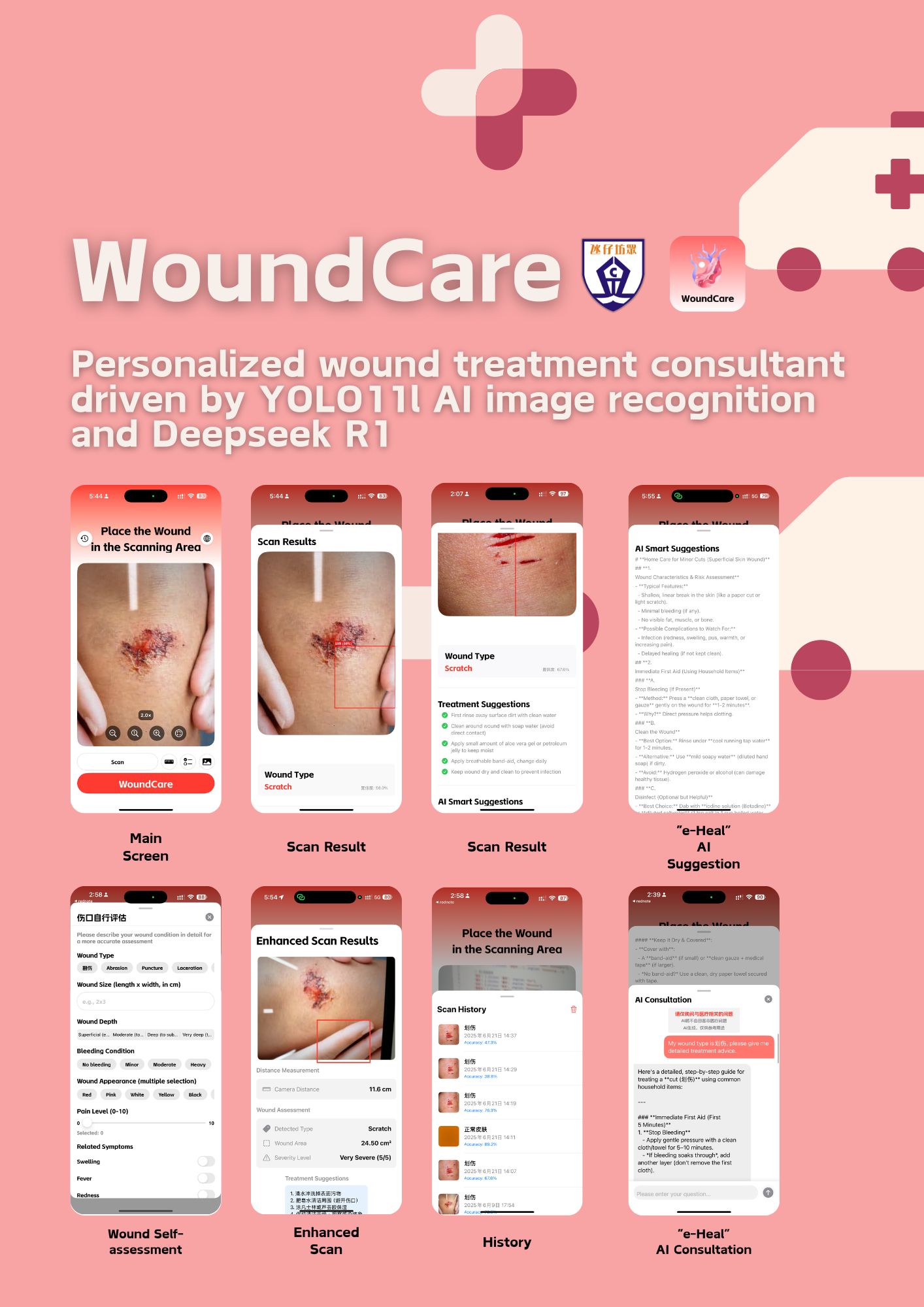

WoundCare: Personalized wound treatment consultant driven by YOLO11l AI image recognition and DeepSeek R1

Description: WoundCare is a personalized wound treatment consultant based on AI image recognition. The system offers real-time wound identification and treatment recommendations, providing better medical support in regions with limited healthcare resources. With WoundCare, local residents can treat wounds more accurately and efficiently, reducing unnecessary use of medical resources and easing the burden on healthcare services.

WoundCare is designed to address the widespread problem of unintentional injuries and improper wound management, especially where immediate professional medical care is not available. Injuries frequently occur in everyday settings such as homes, streets, and sports venues, and without correct and prompt treatment, wounds can develop serious complications such as infection, delayed healing, or even systemic complications such as sepsis. The risk is particularly high in regions with limited access to healthcare, which makes accessible wound care essential for public health.

Traditional wound assessment relies heavily on visual inspection, standardized scales, and manual measurement, all of which can be subjective and error-prone. Contact-based methods can also disturb the wound environment and increase the risk of infection. Studies have reported error rates as high as 15%–20% for traditional visual assessment, which is also often inadequate for detecting deep tissue injuries. By contrast, recent advances in artificial intelligence and image analysis have made more accurate, automated wound assessment possible, improving diagnostic efficiency and reducing the burden on healthcare systems.

WoundCare consists of a mobile application and a physical device that offer similar functionality. At the core of the system is an artificial intelligence model based on the YOLO11 architecture, which is known for its high accuracy and efficient real-time image processing. Users can photograph a wound with their smartphone camera or upload an existing photo. Within approximately 1.5 seconds, the system identifies the wound type and provides treatment recommendations by voice or text. The application runs on both iOS and Android devices, ensuring broad accessibility.
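The step from a detection result to a treatment recommendation can be sketched as below. This is a minimal illustration under stated assumptions, not WoundCare's implementation: the `Detection` record stands in for whatever the YOLO11 model actually returns, and the label names, placeholder advice strings, and the 0.5 confidence threshold are all hypothetical, since the description lists neither the six wound classes nor the treatment texts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Detection:
    """One detector output: class label, confidence score and pixel box (x1, y1, x2, y2)."""
    label: str
    confidence: float
    box: tuple[float, float, float, float]


# Hypothetical labels with placeholder advice; WoundCare's real six wound
# classes and treatment texts are not listed in the project description.
ADVICE = {
    "abrasion": "Placeholder care instructions for abrasions.",
    "laceration": "Placeholder care instructions for lacerations.",
    "burn": "Placeholder care instructions for burns.",
    "normal_skin": "No wound detected.",
}


def pick_result(detections: list[Detection], min_conf: float = 0.5) -> Optional[Detection]:
    """Return the most confident detection at or above min_conf, or None if there is none."""
    candidates = [d for d in detections if d.confidence >= min_conf]
    return max(candidates, key=lambda d: d.confidence, default=None)


def advice_for(detections: list[Detection], min_conf: float = 0.5) -> str:
    """Map the top detection to locally stored advice, with safe fallbacks."""
    best = pick_result(detections, min_conf)
    if best is None:
        return "Wound type unclear: please retake the photo or seek professional care."
    return ADVICE.get(best.label, "Unrecognized wound type: please seek professional care.")


dets = [
    Detection("abrasion", 0.62, (10, 10, 80, 60)),
    Detection("burn", 0.31, (5, 5, 40, 40)),  # below the threshold, so ignored
]
print(advice_for(dets))  # Placeholder care instructions for abrasions.
```

Keeping the advice table on the device means a result can be shown without a network connection; low-confidence or unknown results fall back to a "retake or seek care" message rather than guessing.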
A key feature of WoundCare is its integration with DeepSeek, a large language model that provides interactive, situation-specific treatment guidance when the device is connected to the internet. Users can ask the virtual assistant about wound types and care methods and receive diagnostic and treatment suggestions tailored to their situation. This makes the advice deeper and more relevant, especially in complex or ambiguous cases.

On iOS devices equipped with a LiDAR sensor, WoundCare uses ARKit to measure the distance between the camera and the wound with high precision. By combining this depth data with the wound's dimensions in the image, the application calculates the wound's physical area, which is crucial for assessing severity. Measurement error stays below 5%, supporting more accurate, automated severity assessment.

To develop the model, the team compiled a dataset of 7,668 original wound images covering six wound types plus normal skin. Data augmentation (rotation, flipping, brightness changes, and added Gaussian noise) expanded the dataset to 45,271 images. This larger and more diverse dataset helped the YOLO11 model generalize across wound types and imaging conditions. The model was trained for 100 epochs on an NVIDIA A100 GPU and achieved an average wound classification accuracy above 80% on independent test sets.

WoundCare's user interface is designed for simplicity and accessibility. When the app is launched, users are prompted to grant the necessary permissions and select their preferred language. The main screen offers single-tap scanning, analysis of an uploaded image, or a questionnaire-based self-assessment. Results are displayed clearly, with annotated images and concise treatment advice.
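The LiDAR-based area measurement described above can be approximated with the pinhole camera model: at depth Z, one pixel covers about Z/f_x by Z/f_y metres, where f_x and f_y are the camera's focal lengths in pixels, so a region of N pixels has an area of roughly N · (Z/f_x) · (Z/f_y). The sketch below assumes the wound is roughly flat, faces the camera, and that depth and intrinsics are already available (on iOS these could come from ARKit's `ARFrame.sceneDepth` and `ARCamera.intrinsics`). The function names are hypothetical, and the description does not state the exact formula WoundCare uses.

```python
def pixel_footprint_m(depth_m: float, fx_px: float, fy_px: float) -> tuple[float, float]:
    """Width and height in metres covered by one pixel at depth_m (pinhole camera model)."""
    if depth_m <= 0 or fx_px <= 0 or fy_px <= 0:
        raise ValueError("depth and focal lengths must be positive")
    return depth_m / fx_px, depth_m / fy_px


def wound_area_cm2(wound_pixels: int, depth_m: float, fx_px: float, fy_px: float) -> float:
    """Approximate wound area from the number of image pixels it covers.

    Assumes the wound is roughly flat, faces the camera and lies at a single
    depth; wound_pixels could be a segmentation-mask count or a box's w * h.
    """
    w_m, h_m = pixel_footprint_m(depth_m, fx_px, fy_px)
    return wound_pixels * w_m * h_m * 10_000  # m^2 -> cm^2


def relative_error(estimate: float, reference: float) -> float:
    """Relative error against a reference measurement (e.g. a ruler)."""
    return abs(estimate - reference) / reference


# A 300 x 200 pixel region at 0.30 m with fx = fy = 1500 px: 0.2 mm per pixel,
# so the region spans 6 cm x 4 cm.
area = wound_area_cm2(300 * 200, 0.30, 1500.0, 1500.0)
print(round(area, 1))  # 24.0
```

Because area scales with Z², a small relative error in depth roughly doubles in the area estimate (a 5% depth error gives about 10% area error), which is why precise LiDAR depth matters here. The intrinsics must also match the resolution of the image in which the wound pixels were counted.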
The application also keeps a history of scans and sends notifications to keep users informed about their wound status and recommendations.

The application's architecture is optimized for both speed and accuracy. On iOS, the model is loaded when the app launches; on Android, it is loaded after a photo is captured. The iOS version is written in Swift and the Android version in Kotlin, so each platform benefits from native performance and integration with system features.

In the team's evaluation, the YOLO11 model achieved an average wound-type identification accuracy above 80%, and LiDAR-based area measurement kept errors below 5%. The application delivers wound identification and treatment recommendations in under two seconds, even offline, so users can get timely help regardless of connectivity. When a connection is available, the DeepSeek integration adds diagnostic depth and interactive support.

The project demonstrates that combining object detection, depth sensing, and generative AI is a feasible and effective way to make reliable wound assessment widely available. WoundCare helps users manage wounds more effectively, reducing the risk of complications and improving health outcomes, particularly in resource-limited settings. The solution is user-friendly, fast, accurate, and safe, reflecting the core values of modern healthcare technology.

In conclusion, WoundCare represents a significant advance at the intersection of artificial intelligence and healthcare. By providing accessible, real-time wound assessment and treatment guidance, it addresses a critical gap in first aid and emergency care. The project underscores the importance of integrating technology with healthcare to create safer and healthier environments for everyone.
As WoundCare continues to evolve, it is poised to become a standard tool in first aid, contributing to improved health outcomes and reduced disparities in wound care access around the world.
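The offline-first behaviour described above (local identification and advice always available, DeepSeek consulted only when online) can be sketched as follows. This is an illustration under assumptions: the request body follows the OpenAI-style chat format that DeepSeek's public API accepts, with `deepseek-reasoner` as the API's reasoning-model name, but how WoundCare actually calls DeepSeek is not described. `ask_llm` is a hypothetical transport function that would send the request and return the reply text, and the prompt wording is invented for illustration.

```python
import json
from typing import Callable, Optional


def build_chat_request(wound_label: str, question: str, language: str = "English") -> str:
    """JSON body for an OpenAI-style chat completion request (format assumed)."""
    body = {
        "model": "deepseek-reasoner",  # assumed model name; check DeepSeek's API docs
        "messages": [
            {"role": "system",
             "content": f"You are a first-aid assistant. Reply in {language}. "
                        "Advise professional care for severe or infected wounds."},
            {"role": "user",
             "content": f"Detected wound type: {wound_label}. Question: {question}"},
        ],
    }
    return json.dumps(body)


def answer(wound_label: str, question: str, local_advice: str, online: bool,
           ask_llm: Optional[Callable[[str], str]] = None) -> str:
    """Offline-first: return local advice unless online and the LLM call succeeds."""
    if not online or ask_llm is None:
        return local_advice
    try:
        # ask_llm is a hypothetical transport that sends the body and returns the reply text.
        return ask_llm(build_chat_request(wound_label, question))
    except (OSError, ValueError):  # network failure, timeout or malformed response
        return local_advice
```

Falling back to the local advice on any network or parsing error means a failed request never leaves the user without an answer, which matches the stated goal of working regardless of connectivity.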

Organisation: Fong Chong School of Taipa, Macau

Innovator(s): FONG KUN FAI, ZENG QIAO HUI

Category: Healthcare/Fitness

Country: Macau SAR